Making Sense of AI Risk Scores

Chapter 1 in our AI Accountability & Transparency series (By Sarah H. Cen, James Siderius, Cosimo L. Fabrizio, Aleksander Madry, and Martha Minow)

AI is increasingly used to make decisions. Typically, an AI system provides information that a human uses to then make a decision or perform a task. In this first post of our AI Accountability & Transparency series, we explore how the information that AI provides can be ambiguous. We discuss how this ambiguity can lead to problems of liability in AI as well as the benefits that AI specifications (a minimal description of an AI system, akin to the instructions and warnings that come with electronic devices) can provide.

Let’s say you are subject to an AI-driven decision. What role did AI play in the process?

Typically, an AI-driven decision tool outputs what is known as a “risk score” that is used to make a final decision. For example, AI-driven decision aids in lending provide banks with risk scores that are intended to capture the likelihood that each applicant would default on a loan. In criminal justice, a risk score might assist a judge in bail determinations by estimating the likelihood that a defendant would fail to appear in court if granted bail. Or a potential employer may use an AI-driven hiring tool that predicts an applicant’s success at the company, if hired.

It turns out that the meaning behind a risk score (and behind most of the numbers provided by AI-driven decision tools, for that matter) is unclear not only to the end user but also to most technologists, even AI designers themselves. This ambiguity creates confusion that can become problematic, especially if AI-driven tools continue to be deployed in increasingly consequential decision contexts.

In this post, we explore the various interpretations of risk scores, a candidate (though brittle) solution, and an alternative (more promising) remedy.

What is a risk score?

Let’s start off by looking at risk scores that are intended to capture some notion of probability. (Although our discussion extends to various flavors of risk scores, probabilistic risk scores are widely used in AI-driven decision tools and provide a good case study for AI-driven outputs.)

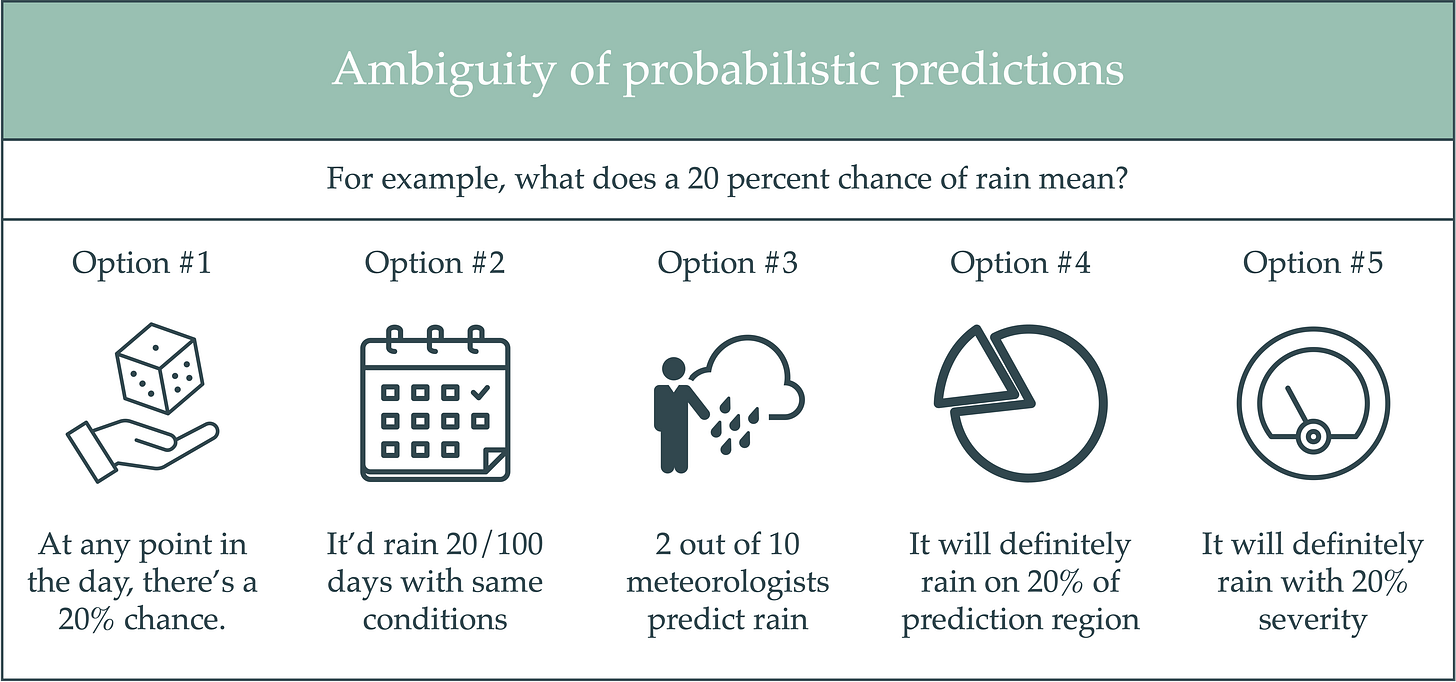

To illustrate the many possible interpretations of probabilistic risk scores, consider a toy example in which a weather app informs you that there is a 20 percent chance of rain in Boston today. What does “chance” mean in this context? There are several possibilities:

A. At any given time during the day, there is a 20 percent chance that it will rain, i.e., it will rain approximately 20 percent of the day.

B. Given 100 days with the same meteorological conditions, it would rain on 20 of them.

C. Out of 10 meteorologists, 2 of them said it would rain.

D. It will definitely rain but only in 20 percent of the greater Boston area.

E. It will definitely rain, and 20 percent characterizes the severity of the rainfall.

This (non-exhaustive) list of interpretations demonstrates that even a concept we turn to every day can have an ambiguous meaning [1]. And we would likely behave differently depending on the interpretation that we take. Indeed, you may not normally care about getting caught in the rain, but suppose that you are dressed up for an important event. Then, you may wish to bring an umbrella if you believe in interpretation E (it will definitely rain, and 20 percent describes the severity), and you may forgo it if you believe in interpretation C (only 2 of 10 meteorologists predicted rain).
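Of the readings listed above, the frequency reading (it rains on 20 of 100 comparable days) is the one that can be checked directly against a record of past forecasts. The following is a minimal sketch of such a check, not a description of how any real weather app works. The function name `observed_frequency_by_forecast` and the forecast record are hypothetical and made up for illustration.

```python
from collections import defaultdict


def observed_frequency_by_forecast(forecasts, outcomes):
    """Group days by the forecast they received and return, for each
    forecast value, the fraction of those days on which it actually rained."""
    counts = defaultdict(lambda: [0, 0])  # forecast -> [rainy days, total days]
    for forecast, rained in zip(forecasts, outcomes):
        counts[forecast][0] += int(rained)
        counts[forecast][1] += 1
    return {f: rainy / total for f, (rainy, total) in counts.items()}


# Hypothetical record: ten days forecast at 0.2 and five days forecast at 0.8.
forecasts = [0.2] * 10 + [0.8] * 5
outcomes = (
    [True, False, False, False, False, True, False, False, False, False]
    + [True, True, True, True, False]
)

frequencies = observed_frequency_by_forecast(forecasts, outcomes)
for forecast, frequency in sorted(frequencies.items()):
    print(f"forecast {forecast:.0%}: rained on {frequency:.0%} of those days")
```

If the forecasts follow the frequency reading, the observed fraction for each group should sit close to the forecast itself. None of the other readings in the list can be tested this way, which is part of why the intended meaning needs to be stated.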

To stress this point further, let’s examine a scenario with higher stakes. Suppose that a company that you’d like to join uses an AI-driven hiring tool to decide whether to offer you a job, and the AI-driven risk score predicts that the likelihood you will succeed at the potential job—based on your credentials and past experience—is 20 percent.

In this scenario, the meaning behind “20 percent” is even less clear than it is in a weather forecast. For example, you might ask: Is the source of uncertainty due to my capabilities or due to factors that are beyond my control (e.g., I majored in chemistry, and the only other chemistry major who previously worked at this company did not perform well)? If some of the uncertainty is due to factors beyond my control, should it really be reflected in my risk score? Should the two types of factors—local and systemic—be communicated to my employer? Should I at least get to address the concerns of company fit that arise from my major?

That different interpretations may elicit different decisions is precisely the reason understanding risk scores is so critical, especially when the stakes are high. In the hiring scenario, most applicants get only one chance at a particular job—it’s a non-repeatable event. Misunderstanding an AI-driven tool’s risk score can therefore cost a highly qualified applicant the job. While a one-off rejection is undesirable, it might not be irreparable. However, if multiple employers use the same hiring tool, then that highly qualified applicant could face rejection at every turn.

Optimizing human + AI decisions

On top of the fact that the meaning behind a risk score may be ambiguous, different people may interpret the same risk score differently. It is well known, for instance, that a human’s understanding of a probability depends on its presentation. Humans interpret “70 percent” as less likely than “7 out of 10,” even though the two amount to the same mathematical probability. Similarly, people often prefer a medication whose reported effectiveness is 97 percent to another medication that has an effectiveness of 96.9 percent, even though the first medication’s effectiveness could have been rounded up from 96.5 percent (a reported “97 percent” is less precise than a reported “97.0 percent”).
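The rounding point can be made concrete with a short calculation. This is a sketch under one assumption: that reported figures are rounded half-up to the number of decimal places shown. The helper name `implied_interval` is hypothetical.

```python
from decimal import Decimal


def implied_interval(reported: str):
    """Return the range [low, high) of true values that would be reported
    as `reported`, assuming half-up rounding to the digits shown."""
    value = Decimal(reported)
    exponent = value.as_tuple().exponent      # 0 for "97", -1 for "97.0"
    half_unit = Decimal(1).scaleb(exponent) / 2
    return value - half_unit, value + half_unit


for reported in ("97", "97.0", "96.9"):
    low, high = implied_interval(reported)
    print(f"reported {reported} percent -> true value in [{low}, {high})")
```

Under this assumption, a reported "97" is compatible with a true value as low as 96.5, which is below every value compatible with a reported "96.9".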

To address such idiosyncrasies in interpretation, a natural idea would be to optimize the decisions that arise from the composition of an AI and a human. That is, if the goal of an AI-driven tool is to achieve a desired outcome (for example, to minimize the chance that a person using a weather tool gets caught in heavy rain without an umbrella), then perhaps the AI-driven tool should be adjusted to encourage the human to ultimately make decisions that match this goal. The underlying principle is to optimize the outcomes of the joint system: human + AI. (The notion of an “optimal” outcome may vary across contexts; it could be to maximize accuracy, minimize false negatives, and so on.)

Typically, the AI is adjusted to the human. For example, suppose that an AI-driven tool learns that employer X underestimates the credentials of female applicants and overestimates the credentials of their male counterparts. In order to correct for the employer’s slant and maximize overall accuracy, the AI could artificially inflate the scores of female applicants and deflate the scores of male applicants.
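To make the mechanism concrete, here is a deliberately simplified sketch of adjusting an AI's displayed score to offset a decision-maker's slant. It assumes a purely additive bias model, in which the human perceives the displayed score plus a fixed group-dependent offset. That model, the function name `displayed_score`, and the offsets are all hypothetical. The sketch illustrates the idea described above and is not a recommended design.

```python
def displayed_score(model_score, group, estimated_human_bias):
    """Shift the score shown to the human so that, after the human's
    (assumed additive) bias is applied, the perceived score matches the
    model's original score. The result is clipped to the range [0, 1]."""
    shown = model_score - estimated_human_bias.get(group, 0.0)
    return min(1.0, max(0.0, shown))


# Hypothetical estimates: the employer perceives scores for one group as
# 0.1 lower than shown, and scores for the other group as 0.1 higher.
estimated_human_bias = {"female": -0.1, "male": 0.1}

for group in ("female", "male"):
    shown = displayed_score(0.6, group, estimated_human_bias)
    perceived = shown + estimated_human_bias[group]
    print(f"{group}: model 0.60, shown {shown:.2f}, perceived {perceived:.2f}")
```

Note that the number the human sees is no longer the model's own estimate, and nothing in the output says so.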

Is optimizing human + AI decisions the answer?

Optimizing the joint human + AI system seems like a good solution. After all, it avoids answering the question “What is a risk score?” by focusing not on how the AI communicates information to the human, but on the final outcome instead. In many contexts, this approach makes a lot of sense. It is not, however, an end-all-be-all solution.

There are several reasons optimizing the human + AI decision might be insufficient. Primary among them is the fact that this approach obfuscates rather than clarifies. The AI is being designed to sway (some would say, manipulate) the final decision-maker, often without their knowledge.

Let’s consider a specific scenario. You go to the doctor with a set of symptoms. It turns out you have a condition that can be resolved with two possible courses of treatment. Option #1 is a strict regimen of diet and exercise. Option #2 is surgery. An AI-driven treatment tool takes in your medical history and produces a risk score that represents the probability your condition will worsen if you do not get surgery. There are three noteworthy details. First, your doctor typically leans against surgery. Second, most patients are unable to uphold a strict regimen of diet and exercise. Third, there has recently been a long string of surgery-averse patients, which leads the AI to believe that your doctor does not fully communicate the risks of Option #1. For these reasons, the AI—which is optimizing the human + AI outcome—decides to inflate your risk score from 0.7 to 0.9, which the doctor then communicates to you.

Do we like this outcome? Perhaps. It accomplishes the goal of impressing upon you and your doctor that Option #2 must be seriously considered. However, it obfuscates your true risk score. You may leave the doctor disheartened that your only option is surgery because, without it, your condition will almost certainly worsen. With more information (e.g., that Option #1 is promising as long as you stick to it), you and your doctor could make a more reasoned, informed decision.

There are other reasons that, on its own, optimizing the joint human + AI system is not an end-all-be-all solution, including:

Calibrating an AI to a human can be brittle. For instance, suppose a doctor wishes to change their behavior suddenly in light of a new study. An AI-driven tool of the form described above would take time to recalibrate, while a doctor informed of the reasons behind the AI’s risk score could immediately incorporate the study’s new findings.

It introduces different versions of reality. Personalized AI systems expose different users to different versions of reality. While one doctor (whose AI inflates risk scores) may believe that certain symptoms are highly concerning, another (whose AI deflates risk scores) may believe that the same symptoms are normal.

It hurts long-term trust and credibility. Once a user figures out that their AI assistant might be compensating for the user’s cognitive tendencies rather than delivering its originally predicted risk score, the user may lose faith in the AI assistant’s recommendations.

Specifications can reduce ambiguity

Our driving concern has been that risk scores are ambiguous concepts, both to users and technologists, which can give rise to undesirable outcomes (e.g., the incorrect use of the AI-driven tool). We just saw that a potential solution—one that took a technological approach—does not resolve the problem on its own. So, what other options are there?

We suggest that AI-driven products come with detailed specifications, i.e., a minimal but thorough product description that allows the end user to integrate AI-driven recommendations in an informed and responsible manner. For example, one could mandate that system designers:

State the inputs to the system and their meanings;

State the outputs of the system and their meanings;

Disclose characteristics of the data on which the system is trained (e.g., time frame of data collection, origins of the data, etc.);

Disclose the main modules of the system (e.g., one module generates candidate treatments for a hospital patient based on their symptoms, the second module predicts the suitability of each treatment based on the patient’s medical history, and so on);

Describe how the output is generated from the data (e.g., the objective function); and

Alert the user every time the AI-driven tool is altered or updated.
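One way to picture such a specification is as a structured record that ships with the tool. The sketch below is a hypothetical illustration of the six items above, not a proposed standard. The class name `AISpecification`, its field names, and the example values are all assumptions.

```python
from dataclasses import dataclass, field, fields


@dataclass
class AISpecification:
    """Hypothetical container mirroring the items listed above."""
    inputs: dict[str, str] = field(default_factory=dict)         # name -> meaning
    outputs: dict[str, str] = field(default_factory=dict)        # name -> meaning
    training_data: dict[str, str] = field(default_factory=dict)  # e.g. time frame, origin
    modules: list[str] = field(default_factory=list)             # main system components
    objective: str = ""                                          # how outputs are generated
    change_log: list[str] = field(default_factory=list)          # one entry per update


def missing_items(spec: AISpecification) -> list[str]:
    """Return the names of required items that were left empty."""
    return [
        f.name
        for f in fields(spec)
        if f.name != "change_log" and not getattr(spec, f.name)
    ]


def record_update(spec: AISpecification, note: str) -> str:
    """Log an update and return the notice that users should be shown."""
    spec.change_log.append(note)
    return f"Notice to users: this tool was updated ({note})."


# Illustrative, incomplete specification for a made-up treatment tool.
spec = AISpecification(
    inputs={"medical_history": "diagnoses and procedures on record"},
    outputs={"risk_score": "estimated probability the condition worsens without surgery"},
    objective="minimize prediction error on recorded patient outcomes",
)
print(missing_items(spec))
print(record_update(spec, "model retrained"))
```

A record like this makes omissions visible: here the designer has not yet described the training data or the system's modules.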

The list above is not exhaustive and certainly not suitable for every AI decision context. The appropriate specification requirements may vary across industry and use case. Disclosing specifications could introduce challenges of its own, including the accessibility of the information and whether the typical user possesses the necessary background to synthesize it. Even so, standardizing specifications would be an important first step toward transparency in AI decision-making.

Specifications serve important functions for both the designer and user. They allow the user to make more informed decisions. The user can better assess how to use the AI-driven tool appropriately and, occasionally, whether the AI tool is even suitable for the user’s needs (e.g., given detailed specifications, users of the popular algorithmic healthcare tool studied by Obermeyer et al. may have been able to identify and correct the algorithm’s biases early) [2]. On the designer’s side, specifications also encourage system designers to revisit their system design and take heed of precisely how their system operates. In addition, specifications help to delineate the liability accepted by designers versus that accepted by users.

Takeaways

Risk scores, and more broadly the outputs of AI-driven decision aids, often have ambiguous meanings. The meaning behind a risk score is unclear not only to the end users, but also to the system designers themselves. The outputs of many popular deep neural networks, for instance, are described as “probabilities” simply because they fall between 0 and 1, but there is little evidence that the output represents a probability in any verifiable, formal sense.
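A small sketch shows why falling between 0 and 1 is a weak guarantee. It uses the logistic (sigmoid) function, a common way to squash a model's raw score into that range. The `temperature` parameter and the raw scores are illustrative assumptions, not taken from any particular system.

```python
import math


def squash(raw_score: float, temperature: float = 1.0) -> float:
    """Map any real-valued raw score into (0, 1) with the logistic function.
    `temperature` is an arbitrary rescaling chosen by the designer."""
    return 1.0 / (1.0 + math.exp(-raw_score / temperature))


raw_scores = [-2.0, 0.5, 3.0]  # made-up raw model outputs
sharp = [squash(s, temperature=0.5) for s in raw_scores]
soft = [squash(s, temperature=4.0) for s in raw_scores]

for raw, a, b in zip(raw_scores, sharp, soft):
    print(f"raw {raw:+.1f}: temperature 0.5 -> {a:.3f}, temperature 4.0 -> {b:.3f}")
```

Both versions rank the three cases identically and both stay between 0 and 1, yet they report very different numbers for the same case. Which of them, if either, matches observed frequencies is an empirical question that the range alone cannot answer.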

In this post, we investigated this ambiguity in depth and briefly walked through a potential, technological remedy (calibrating AI systems to their users). We concluded, however, that this method cannot stand alone. As a complementary endeavor, we suggested that AI-driven decision aids must be accompanied by specifications—a minimal yet thorough description of the system that allows the end user to make informed decisions. A designer could be required, for instance, to clearly state the system’s inputs and outputs as well as characteristics of the data on which the system was trained. Although not a panacea, specifications offer multiple benefits, including increasing user agency, encouraging system designers to carefully consider their design choices, and clarifying questions of liability.

[1] Dawid, Philip. "On individual risk." Synthese 194.9 (2017): 3445-3474.

[2] Obermeyer, Ziad, et al. "Dissecting racial bias in an algorithm used to manage the health of populations." Science 366.6464 (2019): 447-453.