Auditing AI: How Much Access Is Needed to Audit an AI System?

Chapter 2 in our AI Accountability & Transparency series (By Sarah H. Cen, Cosimo L. Fabrizio, James Siderius, Aleksander Madry, and Martha Minow)

How do we ensure that an AI system complies with the law or even does what it claims to do? Checking whether an AI system satisfies certain predetermined criteria falls under the umbrella of AI auditing. Although many agree that auditing is necessary, precisely which components of the AI system should be audited remains unclear. For instance, what can an auditor deduce with access to an AI system’s training data? In this post, we examine four types of information that an auditor could access, discussing the benefits and drawbacks of each.

AI has become an integral part of our modern world. It powers the algorithms behind our social media feeds, assists medical professionals in making diagnoses, offers companies insights on potential hires, and even crafts essays and artwork. Although AI adds a lot to our lives, it will undoubtedly cause unforeseen harm. And when it does, holding AI developers and users accountable can not only help us rectify wrongdoing, but also help to shape future practices and prevent future harm.

To hold AI developers accountable, there must be a way to evaluate—or audit—AI systems. In the absence of external audits, AI systems are only tested internally, without independent oversight. At the same time, companies are unlikely to provide external auditors unfettered access to their AI systems, as doing so would mean giving up trade secrets, losing their competitive advantage, and inviting scrutiny of company practices.

To standardize auditing procedures, we must ask: What is the minimal amount of access needed to audit an AI system? In other words, what might we need companies to disclose or share about their AI systems for auditing purposes?

Answering these questions matters. For one, understanding the minimal amount of access needed to run an effective audit allows us to set realistic expectations. That is, we should not require transparency for transparency’s sake—demands for transparency should be informed by a purpose that guides how much (and how little) access is needed. For another, answering these questions can help to shape regulatory requirements as well as industry standards.

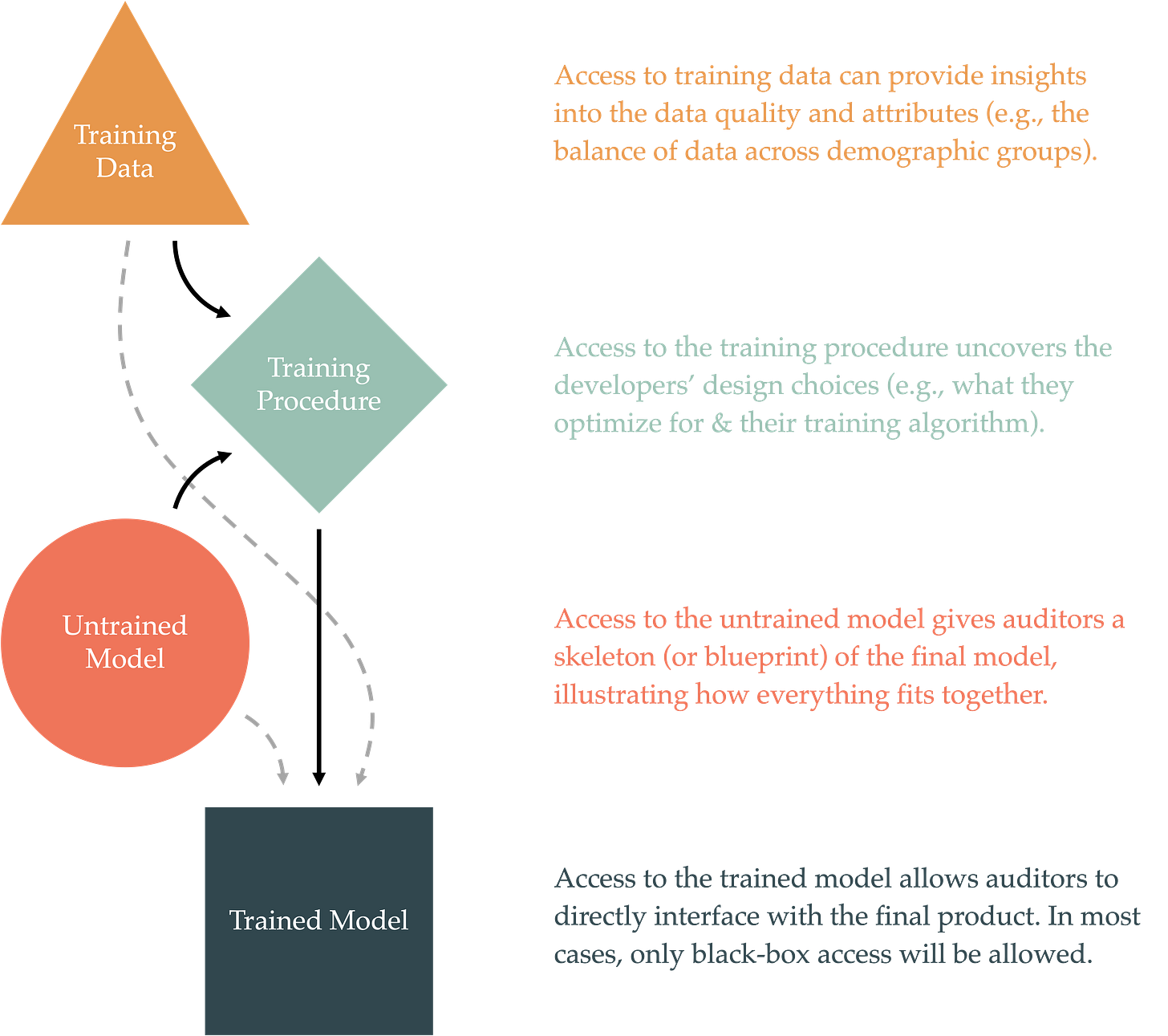

In this post, we discuss four possible variants of access requirements for auditing purposes:

1. Access to the training data,
2. Access to the training procedure,
3. Access to the untrained model, and
4. Access to the trained model.

Together, these four variants cover every part of the AI pipeline. Given all four types of access, an auditor would be able to recreate the AI system in its entirety (an outcome AI developers would like to avoid).[1] Below, we explore the benefits and drawbacks of each of these four variants, as well as some of their combinations.

Option 1: Access to Training Data

Training data are the building blocks of data-driven algorithms. Because data-driven algorithms learn and reflect patterns that appear in the training data, poor-quality data generally produce poor-quality results. As they say, “garbage in, garbage out.”

Given how strongly training data shape an AI system, many have called for companies to release their training data. This raises the question: would access to an AI system’s training data help auditors check that it meets the desired criteria?

In short, not on its own. Training data can be used in many different ways. For example, suppose we want to know whether an AI system that helps employers decide whom to hire discriminates on the basis of gender or race. It is difficult to make such a determination from the training data alone: both discriminatory and non-discriminatory AI systems can arise from the same training dataset, depending on how that dataset is used in the AI pipeline. At best, one may be able to discern the set of “likely” models that could emerge from a dataset.
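To make this concrete, here is a toy illustration in Python. The records, group labels, and both “pipelines” are hypothetical, and real hiring models are far more complex. The two pipelines learn from exactly the same records. The first fits a separate skill cutoff per group, so it reproduces the historical disparity. The second fits a single cutoff and ignores the group column.

```python
# Toy historical hiring records: (group, skill score, hired?). Entirely hypothetical.
DATA = [
    ("A", 0.9, 1), ("A", 0.6, 1), ("A", 0.4, 0),
    ("B", 0.9, 1), ("B", 0.6, 0), ("B", 0.4, 0),
]


def fit_group_aware(data):
    """Pipeline 1: learn a separate skill cutoff per group from past hires."""
    cutoffs = {}
    for group, skill, hired in data:
        if hired:
            cutoffs[group] = min(cutoffs.get(group, skill), skill)
    return lambda group, skill: skill >= cutoffs[group]


def fit_group_blind(data):
    """Pipeline 2: learn a single cutoff for everyone, ignoring the group column."""
    cutoff = min(skill for _, skill, hired in data if hired)
    return lambda group, skill: skill >= cutoff


def selection_rates(model, applicants):
    """Fraction of applicants in each group that the model selects."""
    rates = {}
    for group in sorted({g for g, _ in applicants}):
        skills = [s for g, s in applicants if g == group]
        rates[group] = sum(model(group, s) for s in skills) / len(skills)
    return rates


# New applicants: both groups have identical skill scores.
APPLICANTS = [(g, s) for g in ("A", "B") for s in (0.5, 0.7, 0.95)]

aware = selection_rates(fit_group_aware(DATA), APPLICANTS)
blind = selection_rates(fit_group_blind(DATA), APPLICANTS)
print("group-aware:", aware)  # group A selected at twice the rate of group B
print("group-blind:", blind)  # equal rates for A and B
```

On identical applicants, the first pipeline selects group A at twice the rate of group B, while the second selects both groups at the same rate. Nothing in the dataset alone tells an auditor which of the two systems was built.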

There are, however, some benefits to giving auditors access to data. Training data can provide insight into a company’s practices and priorities. Whether a company cleans its data (i.e., makes sure that it is of sufficiently high quality) or ensures that its dataset is balanced can be easily determined from the training dataset. Strict requirements on the dataset itself tend to be brittle: bright-line rules, such as fixed thresholds, invite loopholes because developers can typically find a way around them. Data disclosure requirements may offer a helpful alternative.

Specifically, one could mandate that AI developers disclose and justify their data acquisition and cleaning procedures. One could also mandate that AI developers disclose characteristics of their data, such as the dataset’s balance (e.g., across demographic groups) and its provenance (e.g., how each sample was obtained). Although disclosure requirements are not value-based guidelines (i.e., they do not specify what behavior is “right” or “wrong”), they encourage AI developers to exercise a certain level of care with their data. Because they are relatively value-agnostic, disclosures remain relevant even when we change our minds about what is “acceptable.” They are also useful for accountability purposes beyond audits (e.g., disclosures provide information that may be relevant when contesting a decision).
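The function below is a minimal sketch of what such a disclosure might compute. It summarizes a dataset’s balance across groups and the provenance of its samples. The record format and the field names `group` and `source` are assumptions made for illustration. The summary only reports facts. It does not say whether a 75/25 split is acceptable, and that is what keeps the disclosure value-agnostic.

```python
from collections import Counter


def dataset_disclosure(records, group_key="group", source_key="source"):
    """Summarize a dataset's balance across groups and the provenance of its samples.

    `records` is a list of dicts; the default field names are hypothetical.
    """
    n = len(records)
    if n == 0:
        raise ValueError("cannot summarize an empty dataset")
    groups = Counter(r[group_key] for r in records)
    sources = Counter(r[source_key] for r in records)
    return {
        "n_samples": n,
        # Share of samples belonging to each group (balance).
        "group_shares": {g: c / n for g, c in sorted(groups.items())},
        # Number of samples obtained through each channel (provenance).
        "provenance": dict(sorted(sources.items())),
    }


records = [
    {"group": "A", "source": "job_board"},
    {"group": "A", "source": "referral"},
    {"group": "A", "source": "job_board"},
    {"group": "B", "source": "job_board"},
]
print(dataset_disclosure(records))
# {'n_samples': 4, 'group_shares': {'A': 0.75, 'B': 0.25}, 'provenance': {'job_board': 3, 'referral': 1}}
```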

In sum, one cannot definitively determine whether an AI system satisfies certain characteristics from the training data alone. However, data disclosures can encourage good practices and prove useful for downstream accountability.

Option 2: Access to Training Procedures

One can alternatively request access to an AI system’s training procedure. By “training procedure,” we mean the high-level steps that the AI developer took to produce the final, trained model. As simple examples, auditors could require that AI developers describe the broad class of models they chose (e.g., transformers or decision trees), the objective functions they optimize (e.g., the factors that a social media algorithm optimizes in its pipeline), the algorithm they applied to the chosen model to achieve the desired objective (e.g., stochastic gradient descent or CART), and other training information (e.g., the amount of training resources).
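One way to picture such a disclosure is as a structured form. The Python sketch below is hypothetical. The class name, field names, and example values are ours and do not come from any regulatory standard. The form flags text fields left blank, such as a missing justification for the developer’s choices.

```python
from dataclasses import dataclass, fields


@dataclass
class TrainingProcedureDisclosure:
    """Hypothetical disclosure form; field names are illustrative, not a standard."""
    model_class: str           # broad class of model, e.g., "transformer"
    objective: str             # what the training procedure optimizes
    algorithm: str             # e.g., "stochastic gradient descent" or "CART"
    training_gpu_hours: float  # one possible measure of training resources
    justification: str = ""    # the developer's (brief) rationale for these choices

    def missing_fields(self):
        """Names of text fields left blank, so an auditor can flag them."""
        return [
            f.name
            for f in fields(self)
            if isinstance(getattr(self, f.name), str) and not getattr(self, f.name).strip()
        ]


disclosure = TrainingProcedureDisclosure(
    model_class="transformer",
    objective="predicted engagement",
    algorithm="stochastic gradient descent",
    training_gpu_hours=1200.0,
)
print(disclosure.missing_fields())  # ['justification']
```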

Like the training data, the training procedure is a formative factor in AI development, but it alone is unlikely to reveal much about the final (trained) model. After all, vastly different models can emerge from the same training procedure, depending on how the model is initialized, characteristics of the training data, and so on.

At the same time, access to an AI system’s training procedure, which includes anything from the developers’ design decisions to the quality checks they run, can allow an auditor to run simple sanity checks. Auditors can, for example, alert AI designers to issues in their pipeline that the designers overlooked, or even diagnose the cause of undesirable system behavior. Mandating that AI developers disclose, describe, and justify (however briefly) their objective functions may also nudge designers to consider their choices with greater care.[2]

While training procedure disclosures can offer a wealth of information to auditors, they should be used judiciously. Indeed, of the four possible disclosures discussed in this post, this option reveals the most intellectual property: it is more likely than the other three to uncover trade secrets.

Option 3: Access to the Untrained Model

A third option is to obtain access to the untrained model, i.e., the specific model that is eventually trained, without the training data or the training procedure. This option is similar to what Twitter did recently when it open-sourced its “recommendation algorithm.”

We can think of an untrained model as the skeleton of the final model, e.g., the neural network architecture or the exact decision tree used by the AI system under consideration. This type of access would tell us what type of data an AI system takes in as inputs, what type of information it outputs (e.g., a number between 0 and 1), how different parts of the model fit together, what role each part plays, and so on. In a sense, the untrained model is the “form” of the AI model, including its inputs, outputs, and general structure.

There are varying degrees of access to an untrained model, from the exact model to a general blueprint. As with the training data and training procedure, a single untrained model can yield many potential AI systems, so an auditor cannot determine how an AI system behaves from the untrained model alone. This option therefore protects the AI developer: depending on how detailed the disclosure is, it need not sacrifice much in terms of trade secrets (which is perhaps one reason Twitter released its algorithm). Moreover, depending on the auditor’s technical fluency, an untrained model may be too opaque to be helpful.

Still, an untrained model arguably provides some of the most interpretable insights into an AI system’s design. For example, the open-sourced Twitter algorithm reveals that Twitter now sources half of a user’s content from in-network Tweets (i.e., from accounts that the user follows) and the other half from out-of-network Tweets. Previously, it allowed users to choose how often they saw Tweets from accounts they do not follow.
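A design decision like this is legible in code even without any trained weights. The function below is a hypothetical sketch of a fixed mixing rule. It is not Twitter’s actual implementation, and the names `blend_feed` and `in_network_share` are ours. The 50/50 split is a hard-coded parameter that an auditor can read directly. By contrast, learned behavior only appears after training.

```python
def blend_feed(in_network, out_of_network, size, in_network_share=0.5):
    """Assemble a feed of up to `size` items with a fixed in-network share.

    Both candidate lists are assumed to be ranked best-first. This is a
    hypothetical sketch of a mixing rule, not Twitter's actual code.
    """
    n_in = min(round(size * in_network_share), len(in_network))
    n_out = min(size - n_in, len(out_of_network))
    return in_network[:n_in] + out_of_network[:n_out]


followed = ["f1", "f2", "f3", "f4"]      # Tweets from accounts the user follows
not_followed = ["x1", "x2", "x3", "x4"]  # Tweets from other accounts
print(blend_feed(followed, not_followed, size=4))  # ['f1', 'f2', 'x1', 'x2']
```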

As a consequence, untrained models can enable auditors to pinpoint glaring flaws or identify discrepancies between a company’s claims and its actual implementation. An untrained model can also reveal implicit assumptions built into an AI system that should be disclosed to the system’s users.

On the other hand, granting access to untrained models may be a red herring. Although it is in the spirit of increasing transparency and encouraging competition, not much can be determined from an untrained model alone. As for competition, even an open-sourced model is costly to use, because training a large model requires enormous resources. Requiring that untrained models be open-sourced may therefore be inconsequential for large companies (which know that very few competitors have the same resources) but pose a major threat to small companies (whose models are smaller and easier for large companies to imitate).

Option 4: Access to the Final Model

The last option is to provide access to the final, trained model. This option is particularly appealing because an auditor can directly test and probe the end product, interacting with the same technology that is ultimately deployed. Unlike all the previous options, access to a trained model allows an auditor to unambiguously determine how the model behaves under different conditions.
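Even simple probing can be informative. With nothing more than the ability to query the model, an auditor can compare selection rates across groups on inputs of their own choosing. The sketch below assumes the deployed system can be called as a Python function that returns a yes/no decision. The names `selection_rate_audit` and `opaque_model` and the probe data are hypothetical. The 0.8 threshold mirrors the “four-fifths rule” from U.S. federal guidelines on employee selection. It is one convention among several, not a universal standard.

```python
def selection_rate_audit(model, probes):
    """Query a black-box `model` on auditor-chosen probes and compare outcomes by group.

    Each probe is a (group label, input features) pair. The group label is used only
    by the auditor for bookkeeping and is never passed to the model. Returns the
    per-group selection rates and the ratio of the lowest rate to the highest.
    """
    selected, total = {}, {}
    for group, features in probes:
        total[group] = total.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(bool(model(features)))
    rates = {g: selected[g] / total[g] for g in sorted(total)}
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return rates, ratio


def opaque_model(x):
    # Hypothetical stand-in for the deployed system; the auditor sees only inputs and outputs.
    return x["years_experience"] >= 3 and x["zip_code"] != "00000"


probes = [
    ("group_1", {"years_experience": 5, "zip_code": "11111"}),
    ("group_1", {"years_experience": 4, "zip_code": "11111"}),
    ("group_2", {"years_experience": 5, "zip_code": "00000"}),
    ("group_2", {"years_experience": 4, "zip_code": "11111"}),
]
rates, ratio = selection_rate_audit(opaque_model, probes)
print(rates, ratio)  # {'group_1': 1.0, 'group_2': 0.5} 0.5
print("flag for review:", ratio < 0.8)  # True under the four-fifths convention
```

The model never sees the group label. Even so, the probes reveal a selection-rate ratio of 0.5, because one input (a hypothetical zip code) acts as a proxy for group membership.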

There are two ways to provide access to the final model: (i) granting black-box access to the trained model, or (ii) disclosing the end product in its entirety (e.g., the model weights).

Black-box access to a trained model allows an auditor to observe the model's outputs (i.e., its behavior) for any inputs of the auditor’s choosing. An auditor can, from black-box access alone, test whether an AI system satisfies certain criteria of interest, from how well it performs on an outlier population to whether it has disparate impact on different races.

Access to the end product in its entirety—which strictly subsumes black-box access and for which there is precedent in other domains, such as the automotive industry—provides the auditor with significantly more information. An auditor could, for example, take gradients with respect to various inputs of interest (a technique that has been used to gain insight into the “logic” behind an AI model).
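The gradient technique can be sketched for a simple case. Assume full access reveals the weights of a logistic model; the feature names and weights below are hypothetical. For this model, the gradient of the output with respect to input k has the closed form p * (1 - p) * w_k. For deep networks, one would instead use automatic differentiation. A larger magnitude means the prediction is locally more sensitive to that input. This is a first-order signal, not a complete explanation.

```python
import math

# Hypothetical trained weights that full access would reveal (logistic model).
WEIGHTS = {"years_experience": 0.8, "employment_gap_years": -1.5}
BIAS = -1.0


def predict(x):
    """Model output: probability of a positive decision for input features `x`."""
    z = BIAS + sum(w * x[k] for k, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def input_gradient(x):
    """Exact gradient of the output w.r.t. each input feature: p * (1 - p) * w_k."""
    p = predict(x)
    return {k: p * (1.0 - p) * w for k, w in WEIGHTS.items()}


applicant = {"years_experience": 2.0, "employment_gap_years": 1.0}
grads = input_gradient(applicant)
# Rank features by how strongly they locally move the prediction.
for feature in sorted(grads, key=lambda k: abs(grads[k]), reverse=True):
    print(f"{feature}: {grads[feature]:+.3f}")
```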

Although full access to the trained model is appealing to auditors, it can disincentivize AI innovation. Even if an AI developer is only required to release its trained model privately to an auditor (rather than open-source it), the developer may still fear losing its competitive advantage if important information is leaked. It may therefore be too much to ask every AI developer to grant full access to its model.

Plus, in many cases, black-box access is more than enough. One can think of black-box access as letting an auditor crash-test a car, whereas full access would also let the auditor inspect every component of the car. Black-box access allows an auditor to test how the model behaves end-to-end without requiring much technical proficiency, which is often needed to make use of full access. Note, however, that if auditors take route (i) instead of (ii), it may be appropriate to cap the number of queries. In theory, a trained model’s behavior can be fully reconstructed from an unlimited number of black-box queries.
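A query cap could be enforced with a thin wrapper around the model. The class below is a hypothetical sketch, and its names are ours. In practice, the limit would be enforced on the developer’s side (for example, behind an API), not in code the auditor controls. Choosing the cap is itself a policy question. If it is too low, the audit loses power. If it is too high, the model becomes easier to extract.

```python
class QueryBudgetExceeded(RuntimeError):
    """Raised when the auditor has used up the agreed number of queries."""


class BudgetedModel:
    """Wrap a black-box model so that at most `max_queries` calls are answered.

    Hypothetical sketch: in practice the limit would be enforced on the
    developer's side (e.g., behind an API), not in code the auditor controls.
    """

    def __init__(self, model, max_queries):
        self._model = model
        self.max_queries = max_queries
        self.used = 0

    @property
    def remaining(self):
        return self.max_queries - self.used

    def __call__(self, x):
        if self.used >= self.max_queries:
            raise QueryBudgetExceeded(f"budget of {self.max_queries} queries exhausted")
        self.used += 1
        return self._model(x)


audited = BudgetedModel(lambda x: 2 * x, max_queries=2)  # toy model for illustration
print(audited(1), audited(2), audited.remaining)  # 2 4 0
try:
    audited(3)
except QueryBudgetExceeded as err:
    print("refused:", err)  # refused: budget of 2 queries exhausted
```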

Compared to the other three options discussed in this post, this approach is outcome-focused. It does not care about an AI developer’s intention, philosophy, or technique. It ignores the means used to obtain an AI system, concentrating solely on the ends. It assesses an AI model based on its end-to-end behavior. In a way, this feature is desirable. Indeed, even if AI developers curate a pristine training dataset, their AI model can still produce poor results, which only becomes apparent with access to the trained model. On the flip side, access to the trained model but nothing else is not always satisfactory from a broader accountability perspective. An individual contesting an AI-driven decision may, for instance, wish to understand how that decision came about—to trace the choices an AI developer made that led to the decision.

Which Option is Best?

Of the options described above, the fourth (access to the trained model) provides an auditor with the greatest flexibility and the least ambiguity. That is, an auditor can test a model against various criteria (e.g., whether it satisfies a property known as calibration) and remain confident that their findings pertain to the specific AI system of interest. In contrast, an auditor cannot definitively say from the training data, training procedure, or untrained model alone whether an AI system is, for example, calibrated.
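Calibration is a test that only needs the trained model’s outputs. The sketch below computes a binned expected calibration error (ECE), one common way to summarize calibration. It assumes the auditor has labeled examples and can obtain the model’s predicted probabilities for them. The bin count of 10 is a conventional but arbitrary choice. A well-calibrated model scores near 0: among cases it assigns a probability of about 0.8, roughly 80% should turn out positive.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """Binned expected calibration error (ECE).

    Groups predictions into equal-width probability bins and returns the
    sample-weighted average gap between the mean predicted probability and the
    observed frequency of positive labels in each bin.
    """
    if not probs or len(probs) != len(labels):
        raise ValueError("need a non-empty, equal number of probabilities and labels")
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the last bin
        bins[idx].append((p, y))
    ece = 0.0
    for b in bins:
        if b:
            mean_p = sum(p for p, _ in b) / len(b)
            freq = sum(y for _, y in b) / len(b)
            ece += len(b) / len(probs) * abs(mean_p - freq)
    return ece


# Hypothetical audit sample: model scores (via black-box queries) and true outcomes.
scores = [0.8, 0.8, 0.8, 0.8, 0.2, 0.2, 0.2, 0.2]
outcomes = [1, 1, 1, 0, 0, 0, 0, 1]
print(round(expected_calibration_error(scores, outcomes), 3))  # 0.05
```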

Still, none of the four options stands above the rest in every way. The first three options serve as useful sanity checks, speak to the intentions of an AI developer, and encourage AI developers to adopt good practices. Moreover, they can ensure that other accountability mechanisms are achievable—for example, an individual contesting an AI-driven decision can cite poorly cleaned data (as provided by Option 1) or an overly simplistic objective function (as provided by Option 2) to argue that the AI-driven decision is inappropriate for them.

Auditors can therefore complement access to trained models with limited, carefully chosen access to the training data, training procedure, and untrained model. They could even adopt a tiered system in which companies of different sizes face different access requirements. Whatever route auditors take, they must exercise careful discretion, since asking for unfettered access is asking for the keys to the AI kingdom.

[1] All four types of access are mutually exclusive (i.e., they do not overlap), with one caveat: full access to the trained model subsumes access to the untrained model. However, as discussed under Option 4, one can instead ask for black-box access to a trained model, in which case all four access types are mutually exclusive.

[2] It is important, however, that training procedure access not be accompanied by strict rules on the information disclosed. For instance, auditors should not require that AI systems adopt one of a pre-specified set of objective functions, as such requirements can have unintended, harmful effects. There is no “right” set of objective functions, and strict rules often force trade-offs (e.g., under a given pipeline, the easiest way to satisfy the rule may be to sacrifice performance on a rare but important population).